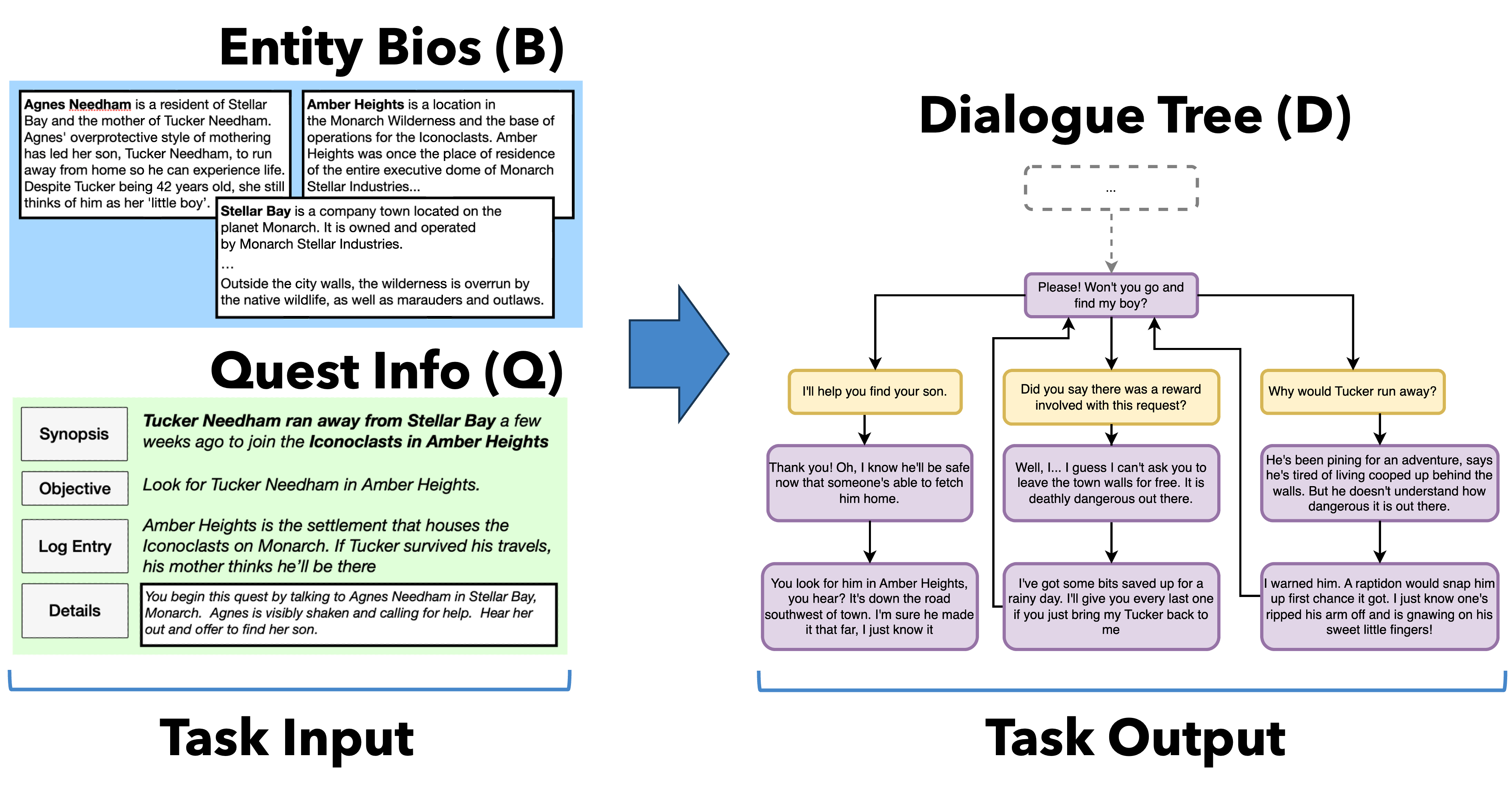

Nathaniel Weir

I am an Applied Scientist at Amazon Web Services. I recently completed my PhD at the Center for Language and Speech Processing at Johns Hopkins University, where I researched natural language processing and artificial intelligence. I was advised by Benjamin Van Durme and supported by an NSF Graduate Research Fellowship.

I have interned with the Aristo reasoning team at The Allen Institute for Artificial Intelligence under Peter Clark. I also interned with Microsoft Semantic Machines under Harsh Jhamtani and with the Deep Learning & Language group at Microsoft Research under Marc-Alexandre Côté and Eric Yuan.

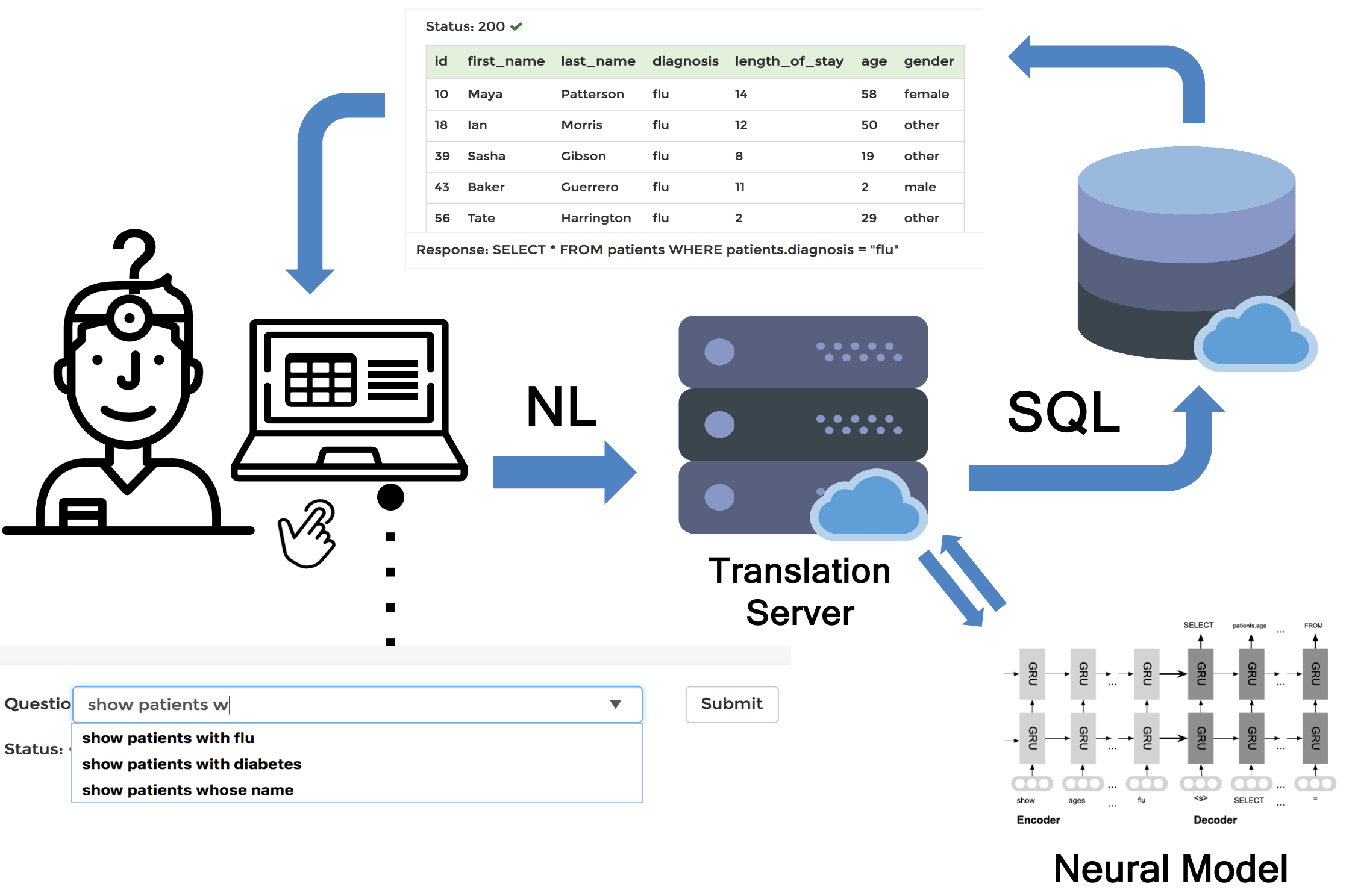

As an undergraduate at Brown University, I worked with Ugur Cetintemel and Carsten Binnig in the Database Group, where we built one of the first neural approaches to parsing natural language into SQL.

My research interests include:

- Neuro-symbolic verification: using formal methods and verifiable rewards to validate LLM reasoning, enabling trustworthy AI through logical consistency checks and scalable synthetic reasoning environments

- Neuro-symbolic reasoning: combining neural and classical symbolic reasoning to build interpretable, logical, and trustworthy systems

- Natural language generation: creating methods for generating diverse, coherent, and contextually appropriate text according to complex constraints

- Question answering: building systems that can answer complex questions about the world by reasoning carefully over large amounts of text

- Semantic parsing: developing methods for translating natural language into logical forms that can be executed by a computer

Projects I have worked on:

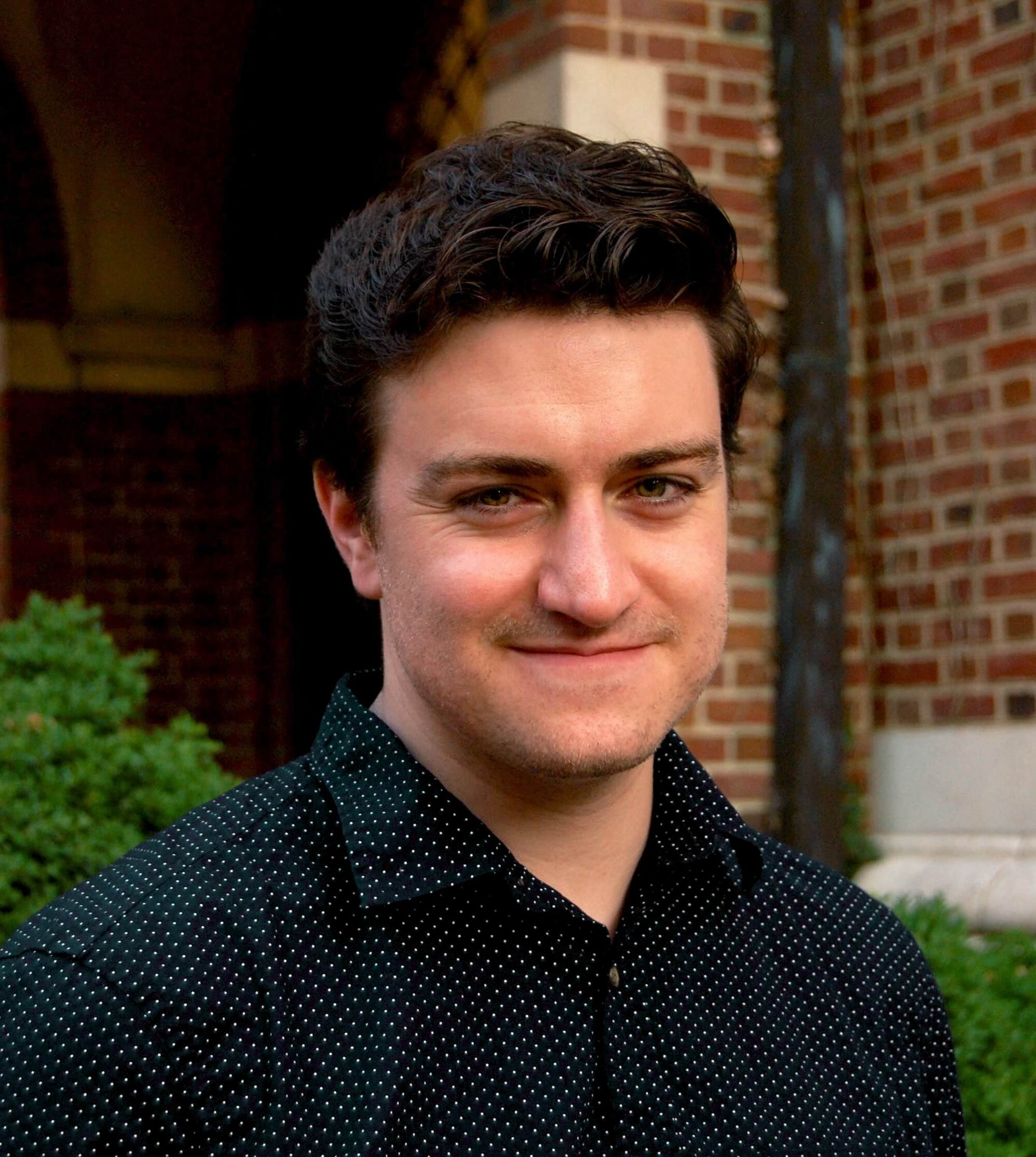

Neuro-symbolic learning and verification

My recent work at AWS involves making LLM reasoning more capable and trustworthy through formal verification, scalable training environments, and other forms of neuro-symbolic integration.

Sam Bayless, Stefano Buliani, Darion Cassel, Byron Cook, ..., Nathaniel Weir, Michael W. Whalen, Jianan Yao. A Neurosymbolic Approach to Natural Language Formalization and Verification. preprint.

Yu Feng, Nathaniel Weir, Kaj Bostrom, Sam Bayless, Darion Cassel, Sapana Chaudhary, Benjamin Kiesl-Reiter, Huzefa Rangwala. VeriCoT: Neuro-symbolic Chain-of-Thought Validation via Logical Consistency Checks. ICLR 2026.

Andre He, Nathaniel Weir, Kaj Bostrom, Allen Nie, Darion Cassel, Sam Bayless, Huzefa Rangwala. ReSyn: Autonomously Scaling Synthetic Environments for Reasoning Models. preprint.

Jiefu Ou, Sapana Chaudhary, Kaj Bostrom, Nathaniel Weir, Shuai Zhang, Huzefa Rangwala, George Karypis. MaxCode: A Max-Reward Reinforcement Learning Framework for Automated Code Optimization. preprint.

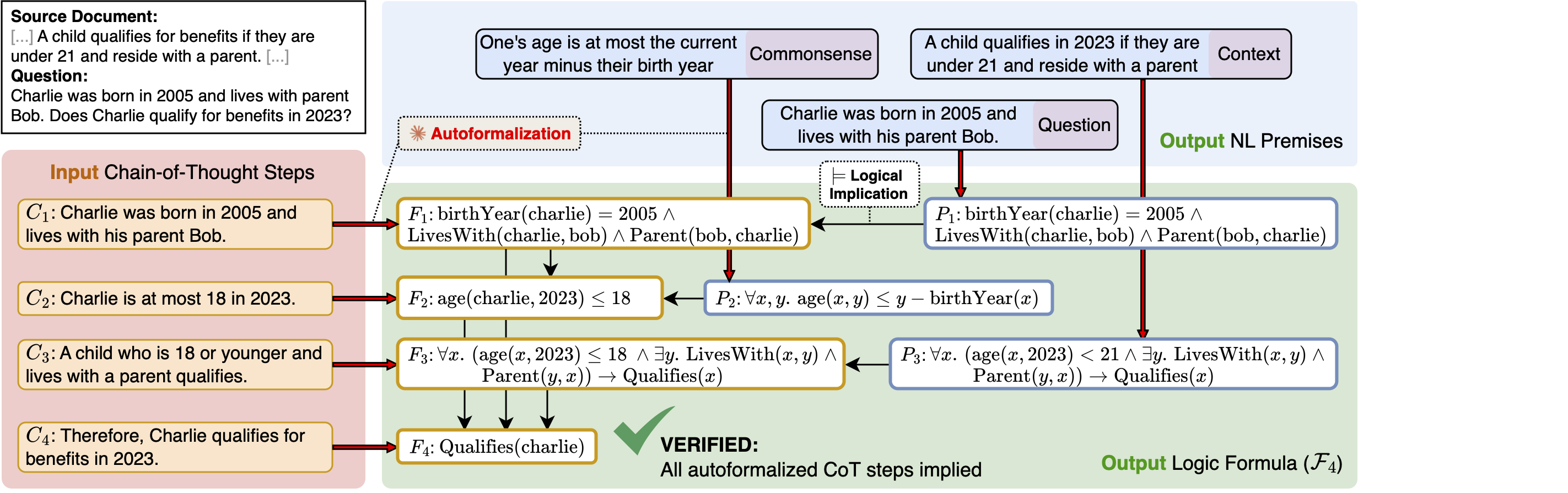

Neuro-symbolic reasoning as entailment tree search

Nathaniel Weir, Kate Sanders, Orion Weller, Shreya Sharma, Dongwei Jiang, Zhengping Jiang, Bhavana Dalvi Mishra, Oyvind Tafjord, Peter Jansen, Peter Clark, Benjamin Van Durme. Enhancing Systematic Decompositional Natural Language Inference Using Informal Logic. EMNLP 2024.

Nathaniel Weir, Bhavana Dalvi Mishra, Orion Weller, Oyvind Tafjord, Sam Hornstein, Alexander Sabol, Peter Jansen, Benjamin Van Durme, and Peter Clark. From Models to Microtheories: Distilling a Model's Topical Knowledge for Grounded Question Answering. ICLR 2025.

Nathaniel Weir, Peter Clark and Benjamin Van Durme. NELLIE: A Neuro-Symbolic Inference Engine for Grounded, Compositional, and Explainable Reasoning. IJCAI 2024.

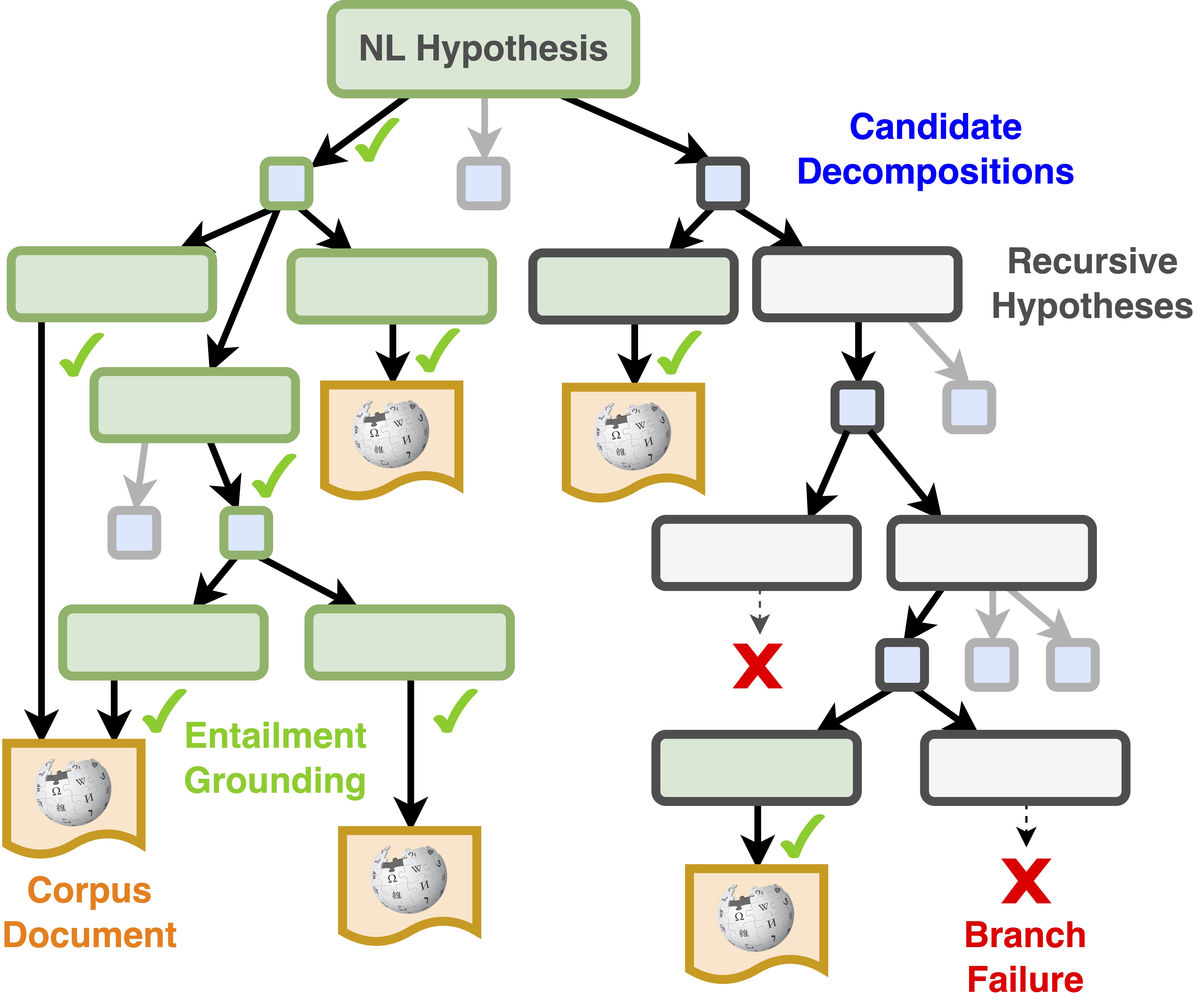

Ontology-constrained dialogue tree generation

Knowledge-guided natural language generation

Language-guided policy search for grounded agents

Nathaniel Weir, Xingdi Yuan, Marc-Alexandre Côté, Matthew Hausknecht, Romain Laroche, Ida Momennejad, Harm Van Seijen, and Benjamin Van Durme. One-Shot Learning from a Demonstration with Hierarchical Latent Language. AAMAS 2023.

Semantic probing of neural language models

Neural semantic parsing

Weir, N. (2019). Bootstrapping Generalization in Neural Text-to-SQL Semantic Parsing Models.

Weir, N. et al. (2020). DBPal: A Fully Pluggable NL2SQL Training Pipeline. SIGMOD.

Full List of Publications:

Andre He, Nathaniel Weir, Kaj Bostrom, Allen Nie, Darion Cassel, Sam Bayless, Huzefa Rangwala. ReSyn: Autonomously Scaling Synthetic Environments for Reasoning Models. preprint.

Kate Sanders, Nathaniel Weir, Sapana Chaudhary, Kaj Bostrom, Huzefa Rangwala. Generating Data-Driven Reasoning Rubrics for Domain-Adaptive Reward Modeling. preprint.

Vikash Singh, Darion Cassel, Nathaniel Weir, Nick Feng, Sam Bayless. VERGE: Formal Refinement and Guidance Engine for Verifiable LLM Reasoning. preprint.

Jiefu Ou, Sapana Chaudhary, Kaj Bostrom, Nathaniel Weir, Shuai Zhang, Huzefa Rangwala, George Karypis. MaxCode: A Max-Reward Reinforcement Learning Framework for Automated Code Optimization. preprint.

Sam Bayless, Stefano Buliani, Darion Cassel, Byron Cook, ..., Nathaniel Weir, Michael W. Whalen, Jianan Yao. A Neurosymbolic Approach to Natural Language Formalization and Verification. preprint.

Yu Feng, Nathaniel Weir, Kaj Bostrom, Sam Bayless, Darion Cassel, Sapana Chaudhary, Benjamin Kiesl-Reiter, Huzefa Rangwala. VeriCoT: Neuro-symbolic Chain-of-Thought Validation via Logical Consistency Checks. ICLR 2026.

Nathaniel Weir, Bhavana Dalvi Mishra, Orion Weller, Oyvind Tafjord, Sam Hornstein, Alexander Sabol, Peter Jansen, Benjamin Van Durme, and Peter Clark. From Models to Microtheories: Distilling a Model's Topical Knowledge for Grounded Question Answering. ICLR 2025.

Nathaniel Weir, Muhammad Khalifa, Linlu Qiu, Orion Weller, and Peter Clark. Learning to Reason via Program Generation, Emulation, and Search. NeurIPS 2024.

Zhengping Jiang, Jingyu Zhang, Nathaniel Weir, Seth Ebner, Miriam Wanner, Kate Sanders, Daniel Khashabi, Anqi Liu, Benjamin Van Durme. Core: Robust Factual Precision Scoring with Informative Sub-Claim Identification. Findings of ACL 2025.

Nathaniel Weir, Kate Sanders, Orion Weller, Shreya Sharma, Dongwei Jiang, Zhengping Jiang, Bhavana Dalvi Mishra, Oyvind Tafjord, Peter Jansen, Peter Clark, Benjamin Van Durme. Enhancing Systematic Decompositional Natural Language Inference Using Informal Logic. EMNLP 2024.

Kate Sanders, Nathaniel Weir, and Benjamin Van Durme. TV-TREES: Multimodal Entailment Trees for Neuro-Symbolic Video Reasoning. EMNLP 2024.

Nathaniel Weir, Ryan Thomas, Randolph d'Amore, Kellie Hill, Benjamin Van Durme, and Harsh Jhamtani. Ontologically Faithful Generation of Non-Player Character Dialogues. EMNLP 2024.

Nathaniel Weir, Peter Clark and Benjamin Van Durme. NELLIE: A Neuro-Symbolic Inference Engine for Grounded, Compositional, and Explainable Reasoning. IJCAI 2024.

Dongwei Jiang, Jingyu Zhang, Orion Weller, Nathaniel Weir, Benjamin Van Durme, Daniel Khashabi. SELF-[IN]CORRECT: LLMs Struggle with Refining Self-Generated Responses. AAAI 2025.

Orion Weller, Marc Marone, Nathaniel Weir, Dawn Lawrie, Daniel Khashabi, and Benjamin Van Durme. “According to ...” Prompting Language Models Improves Quoting from Pre-Training Data. EACL 2024.

Orion Weller, Aleem Khan, Nathaniel Weir, Dawn Lawrie, and Benjamin Van Durme. Defending Against Poisoning Attacks in Open-Domain Question Answering. EACL 2024.

Nathaniel Weir, Xingdi Yuan, Marc-Alexandre Côté, Matthew Hausknecht, Romain Laroche, Ida Momennejad, Harm Van Seijen, and Benjamin Van Durme. One-Shot Learning from a Demonstration with Hierarchical Latent Language. AAMAS 2023.

Jiefu Ou, Nathaniel Weir, Anton Belyy, Felix Yu, and Benjamin Van Durme. InFillmore: Frame-Guided Language Generation with Bidirectional Context. StarSem 2021.

Nathaniel Weir, Joao Sedoc and Benjamin Van Durme. COD3S: Diverse Generation with Discrete Semantic Signatures. EMNLP 2020.

Nathaniel Weir, Adam Poliak, and Benjamin Van Durme. Probing Neural Language Models for Human Tacit Assumptions. CogSci 2020 (video).

Nathaniel Weir, Prasetya Utama, Alex Galakatos, Andrew Crotty, Amir Ilkhechi, Shekar Ramaswamy, Rohin Bhushan, Nadja Geisler, Benjamin Hattasch, Steffen Eger, Carsten Binnig, Ugur Cetintemel. DBPal: A Fully Pluggable NL2SQL Training Pipeline. Proceedings of SIGMOD, 2020. Presented as talks at IBM AI Systems Day 2018 (slides) and North East Database Day 2019.

Nathaniel Weir. Bootstrapping Generalization in Neural Text-to-SQL Semantic Parsing Models. Undergraduate honors thesis, Brown University, Providence, RI 02912.

Fuat Basik, Benjamin Hattasch, Amir Ilkhechi, Arif Usta, Shekar Ramaswamy, Prasetya Utama, Nathaniel Weir, Carsten Binnig and Ugur Cetintemel. DBPal: A Learned NL-Interface for Databases. Proceedings of SIGMOD (demo), 2018.

Prasetya Utama, Nathaniel Weir, Carsten Binnig, and Ugur Cetintemel. Voice-based Data Exploration: Chatting with your Database. Proceedings of the 2017 Workshop on Search-Oriented Conversational AI, 2017.

Teaching

I co-taught EN.601.470/670: Artificial Agents with Benjamin Van Durme.

I was a teaching assistant at Brown for:

- CSCI1570: Design and Analysis of Algorithms: Fall 2018

- CSCI0220: Discrete Structures and Probability: Spring 2017, 2018